In Part01, we installed and configured PostgreSQL and etcd.

In Part02, we installed and configured Patroni.

In Part03, we installed and configured HAProxy and Keepalived.

In this post we will use streaming replication, in which Barman connects to PostgreSQL and uses a replication slot to stream WALs continuously. It is near real-time and is the preferred method for Point-In-Time Recovery (PITR).

Streaming backup uses the pg_basebackup tool to take a live backup from the PostgreSQL primary (leader) server: the contents of the PGDATA directory are copied as the base backup. A base backup alone is not enough, though. To ensure continuity and recoverability, WAL archiving is also needed, because in PostgreSQL every data change is first written to a WAL file. Barman can collect WAL files in two ways:

Using archive_command (push model – PostgreSQL sends the WALs)

Using streaming replication (pull model – Barman fetches the WALs)

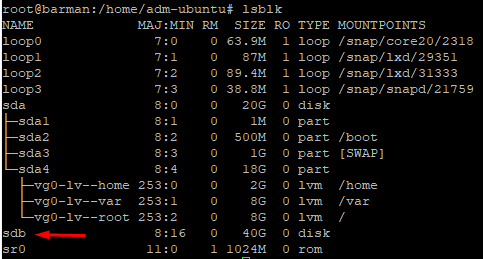

We will install and configure the Barman backup server (192.168.1.147). The Barman server will have 2 disks. The first disk is for the OS, and I formatted it as XFS on LVM (optional). The second disk will use ZFS, and we will configure it together. Barman performs backup and recovery operations using PostgreSQL’s WAL (Write-Ahead Logging) mechanism, the streaming replication feature, and the pg_basebackup tool. Without the PostgreSQL tools, Barman cannot access the database to be backed up or retrieve the necessary data. Therefore, the PostgreSQL client tools must be installed on the Barman server alongside Barman.

Install Barman and Postgres Client

On Barman Server (192.168.1.147)

sudo su

apt update

apt install -y barman

barman --version

Make sure the PostgreSQL client version on the Barman server matches the PostgreSQL version on the Patroni cluster. So I will install version 17 now.

# Add GPG key and repo

wget --quiet -O - https://www.postgresql.org/media/keys/ACCC4CF8.asc | sudo gpg --dearmor -o /usr/share/keyrings/postgresql.gpg

echo "deb [signed-by=/usr/share/keyrings/postgresql.gpg] http://apt.postgresql.org/pub/repos/apt jammy-pgdg main" | sudo tee /etc/apt/sources.list.d/pgdg.list

# Update packages

apt update

# Install PostgreSQL 17 client

apt install postgresql-client-17

Create the ZFS disk:

Add another disk to the Barman server. The following command rescans the SCSI hosts, so you will not have to reboot the server to see the new disk.

for host in /sys/class/scsi_host/*; do echo "- - -" | sudo tee "$host/scan"; ls /dev/sd* ; done

sdb is my new disk.

#install zfs utilities

apt update

apt install -y zfsutils-linux

#Create a ZFS Pool named barman-pool

zpool create -f barman-pool /dev/sdb

#Create a zfs dataset named backups under barman-pool

zfs create barman-pool/backups

chown -R barman:barman /barman-pool/backups

chmod 700 /barman-pool/backups

#Optimize ZFS dataset for Postgresql

zfs set mountpoint=/var/lib/barman barman-pool/backups

zfs set compression=lz4 barman-pool/backups

zfs set dedup=on barman-pool/backups

zfs set atime=off barman-pool/backups

zfs set recordsize=128K barman-pool/backups

zfs set primarycache=metadata barman-pool/backups

#Check if dedup and compression are applied to the ZFS dataset

zfs get compression,dedup barman-pool/backups

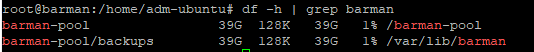

df -h | grep barman
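The same check can be scripted instead of read off the screen. The following is a minimal sketch, assuming the tab-separated output of zfs get -H -o property,value; check_zfs_props is a hypothetical helper name, not part of ZFS or Barman.

```shell
#!/bin/sh
# Hypothetical helper (not part of ZFS or Barman).
# Reads "property<TAB>value" lines on stdin, as printed by:
#   zfs get -H -o property,value compression,dedup barman-pool/backups
# Each argument is an expected setting in the form property=value.
check_zfs_props() (
    actual=$(cat)
    status=0
    for want in "$@"; do
        prop=${want%%=*}
        value=${want#*=}
        # Pick the value reported for this property, if any
        got=$(printf '%s\n' "$actual" | awk -F '\t' -v p="$prop" '$1 == p { print $2 }')
        if [ "$got" != "$value" ]; then
            echo "MISMATCH: $prop is '${got:-unset}', expected '$value'" >&2
            status=1
        fi
    done
    exit "$status"
)

# Example use on the Barman server:
#   zfs get -H -o property,value compression,dedup barman-pool/backups \
#     | check_zfs_props compression=lz4 dedup=on
```

The function returns a non-zero status when any property differs, so it can be used in a provisioning script to stop early.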

EDIT PATRONI CONFIG FILE FOR BARMAN:

On Patroni Nodes:

nano /etc/patroni/config.yml

Add the following lines to the "parameters" section of the config.yml file (on all 3 Patroni nodes)

parameters:

  max_connections: 100

  shared_buffers: 256MB

  wal_level: replica

  archive_mode: on

  #if you want dual WAL archiving (push + stream), you can use an archive command like the one below

  #replace USER@BARMAN_HOST with the SSH user and address of your Barman server

  archive_command: 'rsync -a %p USER@BARMAN_HOST:/var/lib/barman/postgres/incoming/%f'

  max_wal_senders: 5

  wal_keep_size: 512MB

In the same file (config.yml), add the following entries to the pg_hba list in the "postgresql" section.

postgresql:

  pg_hba:

    - host replication replicator 127.0.0.1/32 md5

    - host replication barman 192.168.1.147/32 md5

    - host all barman 192.168.1.147/32 md5

    - host all postgres 127.0.0.1/32 trust

    - host all postgres 192.168.1.147/32 md5

    - host replication replicator 192.168.1.144/32 md5

    - host replication replicator 192.168.1.145/32 md5

    - host replication replicator 192.168.1.146/32 md5

    - host all all 127.0.0.1/32 md5

    - host all all 0.0.0.0/0 md5
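The per-node replication entries all follow one pattern, so they can be generated rather than typed by hand. This is only a sketch; hba_replication_entries is a hypothetical helper name, and the /32 mask and md5 method simply mirror the entries above.

```shell
#!/bin/sh
# Hypothetical helper: print one Patroni pg_hba list item per node address.
# Usage: hba_replication_entries ROLE ADDRESS...
hba_replication_entries() (
    role=$1
    shift
    for addr in "$@"; do
        # "-" is passed as an argument so the format never starts with a dash
        printf '%s host replication %s %s/32 md5\n' '-' "$role" "$addr"
    done
)

# Example:
#   hba_replication_entries replicator 192.168.1.144 192.168.1.145 192.168.1.146
```

The output still has to be pasted under pg_hba with the indentation your config.yml uses.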

Restart Patroni on each node and check which node is currently the leader.

systemctl restart patroni

journalctl -u patroni -f

patronictl -c /etc/patroni/config.yml list

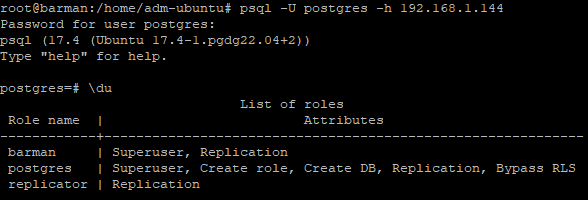

Log on to the Patroni leader node via SSH and run the following commands to create the barman user (use your own password)

psql -U postgres -h 127.0.0.1 -c "CREATE ROLE barman WITH REPLICATION LOGIN PASSWORD 'myrepPASS123';"

psql -U postgres -h 127.0.0.1 -c "ALTER ROLE barman WITH SUPERUSER;"

#Check if the user is created

psql -U postgres -h 127.0.0.1 -c "\du"

Now go back to the Barman server via SSH and check that you can connect to PostgreSQL and run commands from there:

psql -U postgres -h 192.168.1.144

On Barman Server:

Create a barman config file

nano /etc/barman.d/postgres.conf

Content of that file is as follows.

In this config, the leader node is used for the base backup and a replica node is used for streaming WAL segments. This removes I/O pressure from the leader and helps avoid conflicts, timeouts, or resource bottlenecks. When both the base backup and WAL streaming happen on the same node, the following might happen:

- The base backup can temporarily lock out streaming.

- PostgreSQL may stall WAL rotation while the backup runs.

- If that happens, Barman waits forever for a WAL that is never closed, until the backup fails.

With this config, we:

- Let the replica handle WAL archiving (which is safe and efficient)

- Let the leader handle the full backup

- Avoid locking/blocking issues

Result: the backup completes cleanly and quickly.

[postgres]

description = "PostgreSQL 17 Replica Node"

#conninfo: Regular connection to the leader, used by Barman for checks and management queries

conninfo = host=192.168.1.144 port=5432 user=barman password=myrepPASS123 dbname=postgres

#backup_method = postgres takes the base backup with pg_basebackup

backup_method = postgres

#streaming_conninfo: Grabbing WAL files from replica node

streaming_conninfo = host=192.168.1.145 port=5432 user=barman password=myrepPASS123 dbname=postgres

#When streaming_archiver is enabled, Barman keeps a continuous stream of WALs from the server defined in streaming_conninfo

streaming_archiver = on

slot_name = barman_slot

create_slot = auto

immediate_checkpoint = true

#Full path to PostgreSQL binaries

path_prefix = /usr/lib/postgresql/17/bin/

#Barman will delete older backups automatically when barman cron runs

retention_policy = RECOVERY WINDOW OF 30 DAYS

#Retains WAL files only if they belong to a valid backup. If retention period is over for a backup, its corresponding WALs are deleted, as well.

wal_retention_policy = main

chmod 644 /etc/barman.d/postgres.conf
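With chmod 644 the config file, including the password in the two conninfo lines, is readable by every local user. One common alternative is to keep the password in the barman user's ~/.pgpass file and drop password=... from the connection strings. The sketch below only builds the entry; pgpass_escape and pgpass_line are hypothetical helper names, and using "*" as the database field is an assumption chosen so that one entry matches both the regular and the replication connection.

```shell
#!/bin/sh
# Sketch: build a ~/.pgpass entry in the form host:port:database:user:password.
# pgpass_escape and pgpass_line are hypothetical helper names.
# libpq requires ":" and "\" inside a field to be backslash-escaped.
pgpass_escape() {
    printf '%s' "$1" | sed -e 's/\\/\\\\/g' -e 's/:/\\:/g'
}

pgpass_line() {
    # $1=host  $2=port  $3=database ("*" matches any)  $4=user  $5=password
    printf '%s:%s:%s:%s:%s\n' \
        "$(pgpass_escape "$1")" "$2" "$3" \
        "$(pgpass_escape "$4")" "$(pgpass_escape "$5")"
}

# Example, run as the barman user (one entry per PostgreSQL node):
#   pgpass_line 192.168.1.144 5432 '*' barman 'myrepPASS123' >> ~/.pgpass
#   chmod 600 ~/.pgpass
```

libpq ignores a .pgpass file whose permissions are less strict than 0600, so the chmod in the example is required.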

And finally switch user to barman and check if everything is OK

su - barman

barman check postgres

Barman server is healthy, connected, streaming WALs, and fully operational.

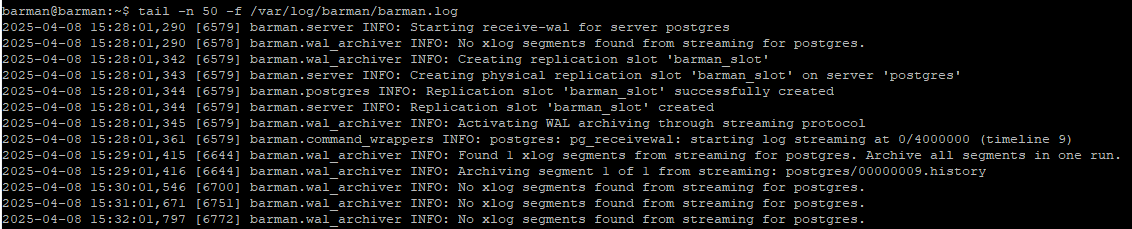

Check barman logs

tail -n 50 -f /var/log/barman/barman.log

Barman WAL archiving via streaming is up and running perfectly.

First Full Backup:

Optional: PostgreSQL writes WAL in 16MB segment files. By default it closes and rotates a segment only once it is full (or when a switch is forced, for example with pg_switch_wal()). To trigger the rotation here, I will fill the current WAL file.

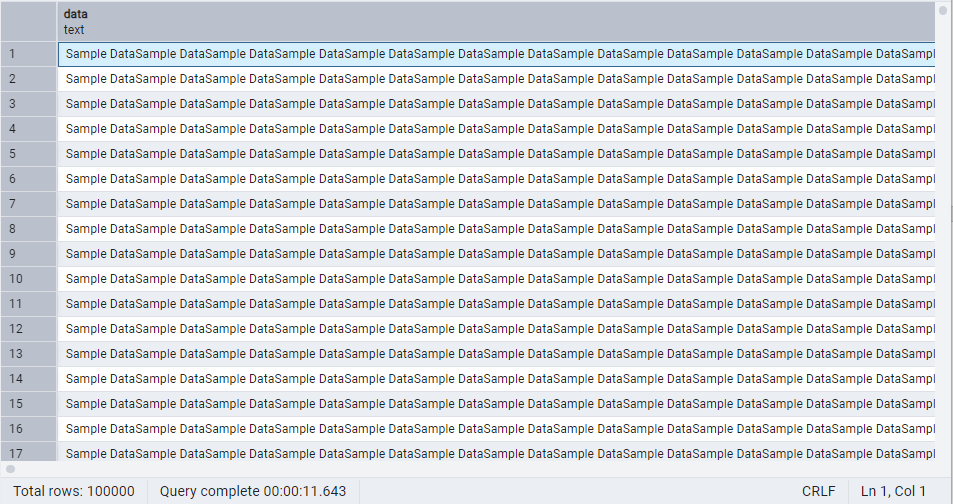

So, I will create a new, large table and generate some data (100,000 rows). Once you have executed both statements, you can skip the CREATE TABLE line and run the INSERT INTO statement as many times as you like to add more data.

CREATE TABLE myWALtest(id SERIAL PRIMARY KEY, data TEXT);

INSERT INTO myWALtest(data) SELECT repeat('Sample Data', 1000) FROM generate_series(1,100000);

Let's run the first full backup

#Start a Full backup

barman backup postgres

#You should see backup files

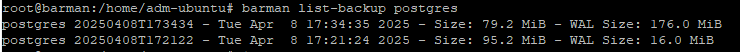

barman list-backup postgres

The following command will show the current WAL segment, DB size, number of available backups, etc.

barman status postgres

The base backup completed.

Check backup files and size

Barman has received all the WAL segments it needs. The WAL size is reported (176 MiB), which means the WALs were archived completely.

SCHEDULED JOBS:

Let's define scheduled tasks (cron jobs) for the backups.

crontab -e -u barman

#Automatically runs a full backup of the PostgreSQL server every day at 2:00 AM.

#"postgres" refers to the server name defined in your Barman config

# logs both output and errors to backup.log

0 2 * * * /usr/bin/barman backup postgres >> /var/log/barman/backup.log 2>&1

#Every 5 minutes, it runs the barman cron command, which:

#Archives new WAL files

#Finalizes backups waiting for WALs

#Applies retention policies

#Cleans up expired or failed backups

*/5 * * * * /usr/bin/barman cron >> /var/log/barman/cron.log 2>&1
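The 2:00 AM job above starts a backup unconditionally. A possible refinement is a small wrapper that first runs barman check and skips the backup when the check fails. This is a sketch, not part of Barman: guarded_backup is a hypothetical function name, BARMAN_BIN is an assumed override variable, and the wrapper relies on barman check returning a non-zero exit status on failure.

```shell
#!/bin/sh
# Hypothetical wrapper for the nightly job: run "barman backup" only if
# "barman check" succeeds. BARMAN_BIN is an assumed override variable.
guarded_backup() (
    server=$1
    barman_bin=${BARMAN_BIN:-/usr/bin/barman}
    if ! "$barman_bin" check "$server"; then
        echo "barman check failed for '$server'; backup skipped" >&2
        exit 1
    fi
    "$barman_bin" backup "$server"
)

# Example: save the function in a script that ends with
#   guarded_backup postgres
# and call that script from the crontab line instead of "barman backup".
```

Because both commands write to stdout and stderr, the existing redirection to backup.log keeps working, and the log shows why a backup was skipped.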

BACKUP RESTORE:

PITR (Point-In-Time Recovery) lets you restore your PostgreSQL database to a specific moment in the past (a timestamp, a transaction, or a WAL location (LSN)).

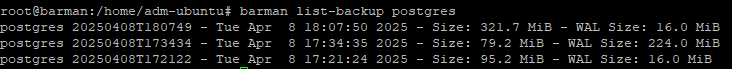

Let's first see our backup files.

root@barman:/home/adm-ubuntu# barman list-backup postgres

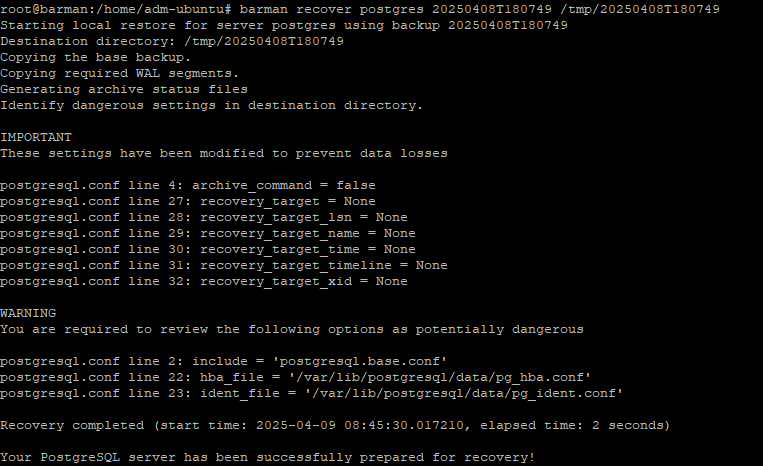

Restore the base backup to a directory on the Barman server (I will use the latest backup: 20250408T180749)

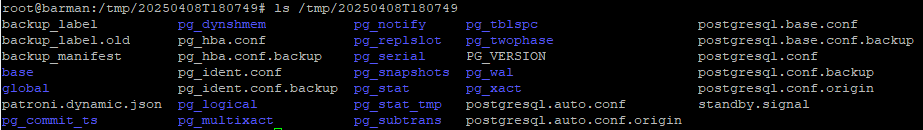

barman recover postgres 20250408T180749 /tmp/20250408T180749

You can also specify an exact point to restore to (Point-In-Time Recovery)

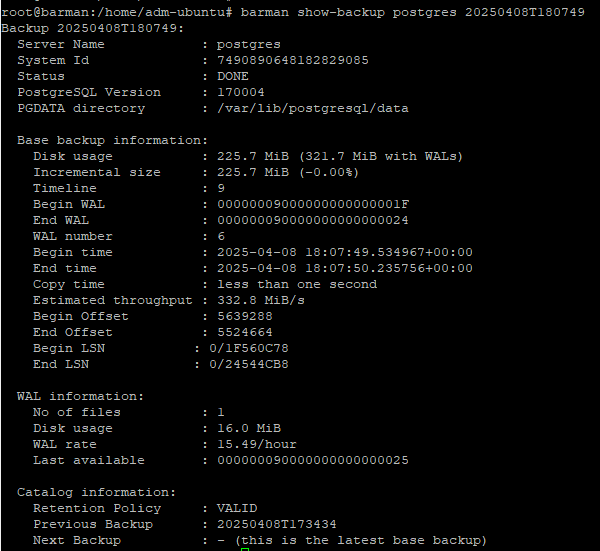

First check what time range is valid for this particular backup:

barman show-backup postgres 20250408T180749

We can only do PITR to a time after the backup's "End time" and no later than the latest streamed WAL.

I will just restore it to /tmp

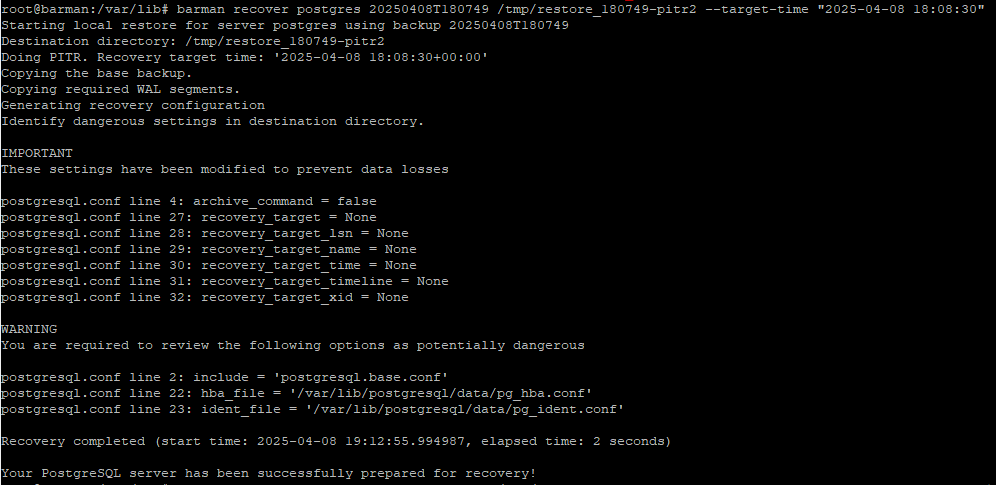

barman recover postgres 20250408T180749 /tmp/restore_180749-pitr2 --target-time "2025-04-08 18:08:30"

How to Know or Estimate a Valid Time Range:

Our output says:

Begin time 2025-04-08 18:07:49.534967

End time 2025-04-08 18:07:50.235756

Begin WAL 00000009000000000000001F

End WAL 000000090000000000000024

Last available WAL 000000090000000000000025

The base backup covers everything up to WAL segment 000000090000000000000024. We have WAL segment 25 (which came after the base backup)

Therefore, we can replay WALs beyond the base backup's End time with the help of Last available WAL (000000090000000000000025).
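The WAL file names in that output can be decoded to see how far the archive reaches. Each name is 24 hex digits: 8 for the timeline, 8 for the log number, and 8 for the segment within the log. The sketch below assumes the default 16 MB segment size (256 segments per log); the function names are hypothetical.

```shell
#!/bin/sh
# Sketch: decode a 24-character WAL file name (timeline, log, segment).
# Function names are hypothetical. Assumes the default 16 MB segment size,
# which gives 256 segments per log.
wal_timeline() {
    printf '%d\n' "0x$(printf '%s' "$1" | cut -c1-8)"
}

wal_segno() (
    log=$(printf '%d' "0x$(printf '%s' "$1" | cut -c9-16)")
    seg=$(printf '%d' "0x$(printf '%s' "$1" | cut -c17-24)")
    echo $((log * 256 + seg))
)

# Number of segments from the first name to the second, inclusive.
wal_span() {
    echo $(( $(wal_segno "$2") - $(wal_segno "$1") + 1 ))
}

# Example with the values from the output above:
#   wal_timeline 00000009000000000000001F    # timeline 9
#   wal_span 00000009000000000000001F 000000090000000000000025
```

For the backup shown here, Begin WAL is segment 31 (0x1F), End WAL is segment 36 (0x24), and the last available WAL is segment 37 (0x25), so one complete segment after the backup is available for replay.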

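So, we should estimate the target time like this:

- Pick a time after the End time of the backup (18:07:50), when WAL 24 was completed.

- Stay close to the backup (within 1–2 minutes), so the time is still covered by the last available WAL. 18:08:30 worked in this case.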
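The first condition is easy to check in a script before calling barman recover. A minimal sketch, assuming both timestamps are written as "YYYY-MM-DD HH:MM:SS" (optionally with fractional seconds) in the same time zone, so that a plain string comparison orders them correctly; target_after_backup_end is a hypothetical helper name.

```shell
#!/bin/sh
# Sketch: succeed only if a PITR target time is later than the backup's
# End time. Assumes both timestamps use "YYYY-MM-DD HH:MM:SS[.ffffff]" in
# the same time zone, so string comparison matches chronological order.
# target_after_backup_end is a hypothetical helper name.
target_after_backup_end() {
    # $1 = target time, $2 = End time from "barman show-backup"
    # The "x" prefix forces expr to compare strings, not numbers.
    [ "$(LC_ALL=C expr "x$1" \> "x$2")" = 1 ]
}

# Example with the values from this backup:
#   target_after_backup_end "2025-04-08 18:08:30" "2025-04-08 18:07:50.235756" \
#     && echo "target time is after the backup end"
```

This does not verify the upper bound: whether the target is still covered by the archived WALs has to be judged from the last available WAL.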

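Anyway, with the barman recover <servername> command, we performed a full filesystem-level restore. The restored directory is on the Barman server, so copy it to the target host (or recover there directly with the --remote-ssh-command option), then point PostgreSQL to it as its new data directory.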

Restore Procedure For Standalone Postgres:

#Stop Postgres

systemctl stop postgresql

#Find your current data directory and rename it

mv /var/lib/postgresql/17/main /var/lib/postgresql/17/main.old

#Copy the restored files into place & set permission

cp -a /tmp/restore_180749-pitr2 /var/lib/postgresql/17/main

chown -R postgres:postgres /var/lib/postgresql/17/main

chmod 700 /var/lib/postgresql/17/main

#If the standby.signal file is present, PostgreSQL will start as a standby.

#If you're recovering as a standalone instance, remove standby.signal before starting

rm -f /var/lib/postgresql/17/main/standby.signal

#Start Postgres. PostgreSQL will start from this new data directory

systemctl start postgresql

Restore Procedure For a Patroni Node:

#Clean up any unnecessary files

rm -f /tmp/restore_180749-pitr2/postmaster.pid

#Ensure standby.signal exists. If it doesn't:

touch /tmp/restore_180749-pitr2/standby.signal

#Move data to Patroni’s expected location

systemctl stop patroni

sudo mv /var/lib/postgresql/17/main /var/lib/postgresql/17/main.old

sudo cp -a /tmp/restore_180749-pitr2 /var/lib/postgresql/17/main

sudo chown -R postgres:postgres /var/lib/postgresql/17/main

sudo chmod 700 /var/lib/postgresql/17/main

systemctl start patroni
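Before moving a restored directory into place, a quick sanity check can catch an incomplete copy. The following is a sketch with a hypothetical helper name, check_restored_datadir. It only tests for the PG_VERSION file that every PostgreSQL data directory contains and for a leftover postmaster.pid.

```shell
#!/bin/sh
# Hypothetical pre-flight check for a restored data directory before it is
# moved into place. Returns non-zero and reports each problem it finds.
check_restored_datadir() (
    dir=$1
    status=0
    if [ ! -f "$dir/PG_VERSION" ]; then
        echo "missing PG_VERSION: $dir does not look like a data directory" >&2
        status=1
    fi
    if [ -e "$dir/postmaster.pid" ]; then
        echo "stale postmaster.pid found in $dir" >&2
        status=1
    fi
    exit "$status"
)

# Example:
#   check_restored_datadir /tmp/restore_180749-pitr2 && echo "looks usable"
```

A passing check does not prove the backup is consistent. It only rules out the two most obvious mistakes before Patroni or PostgreSQL is started.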