In Part01, We installed and configured Postgresql and ETCD

In Part02, We installed and configured Patroni

In this part, We will install and configure HAProxy & Keepalived

The following installation will be carried out on HAProxy nodes (192.168.1.141 and 142)

Install HAProxy on both servers

sudo su

apt update

apt -y install haproxy

nano /etc/haproxy/haproxy.cfg

Paste the following config at the end of haproxy.cfg (the same on both nodes):

frontend postgres_frontend

bind *:5432

timeout client 30s

mode tcp

option tcplog

default_backend postgres_backend

backend postgres_backend

mode tcp

option tcp-check

option httpchk GET /primary # patroni provides an endpoint to check node roles

http-check expect status 200 # expect 200 for the primary node

server postgres01 192.168.1.144:5432 port 8008 check

server postgres02 192.168.1.145:5432 port 8008 check

server postgres03 192.168.1.146:5432 port 8008 check

HAProxy listens to the request on port 5432 and checks the primary backend node and then forwards the request to its primary backend node. It checks the backend nodes' health by using port 8008.

Reload HAProxy service and check if config file is valid

systemctl reload haproxy

haproxy -c -f /etc/haproxy/haproxy.cfg

Install KeepAlived on both servers:

apt update

apt install keepalived -y

nano /etc/keepalived/keepalived.conf

Node1:

global_defs {

enable_script_security

script_user keepalived_script

}

vrrp_script check_haproxy {

script "/etc/keepalived/check_haproxy.sh"

interval 2

fall 3

rise 2

}

vrrp_instance VI_1 {

state MASTER

interface ens33 # update with your nic

virtual_router_id 51

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 34Istanb # change alpha numeric and should be 8 char

}

virtual_ipaddress {

192.168.1.140

}

track_script {

check_haproxy

}

}

Node2:

global_defs {

enable_script_security

script_user keepalived_script

}

vrrp_script check_haproxy {

script "/etc/keepalived/check_haproxy.sh"

interval 2

fall 3

rise 2

}

vrrp_instance VI_1 {

state BACKUP

interface ens33 # update with your nic

virtual_router_id 51

priority 90

advert_int 1

authentication {

auth_type PASS

auth_pass 34Istanb # change

}

virtual_ipaddress {

192.168.1.140

}

track_script {

check_haproxy

}

}

Create a check script on each node. This script will check if HAProxy is up or down. If down keepalived consider its node as down and will not get requests.

nano /etc/keepalived/check_haproxy.sh#!/bin/bash

# Define the port to check (e.g., HAProxy frontend port)

PORT=5432

# Check if HAProxy is running

if ! pidof haproxy > /dev/null; then

echo "HAProxy is not running"

exit 1

fi

# Check if HAProxy is listening on the expected port

if ! ss -ltn | grep -q ":${PORT}"; then

echo "HAProxy is not listening on port ${PORT}"

exit 2

fi

# All checks passed

exit 0

We need to add a user to execute these scripts. Set ownerships and permissions. Restart keepalived.

useradd -r -s /bin/false keepalived_script

chmod +x /etc/keepalived/check_haproxy.sh

chown keepalived_script:keepalived_script /etc/keepalived/check_haproxy.sh

chmod 700 /etc/keepalived/check_haproxy.sh

systemctl restart keepalived

journalctl -u keepalived -f

Journalctl command above will show the nodes' keepalived status (MASTER or BACKUP). Also we should be able to ping 192.168.1.140 (Virtual IP) at this moment.

Data Integrity and Replication Test with PGAdmin:

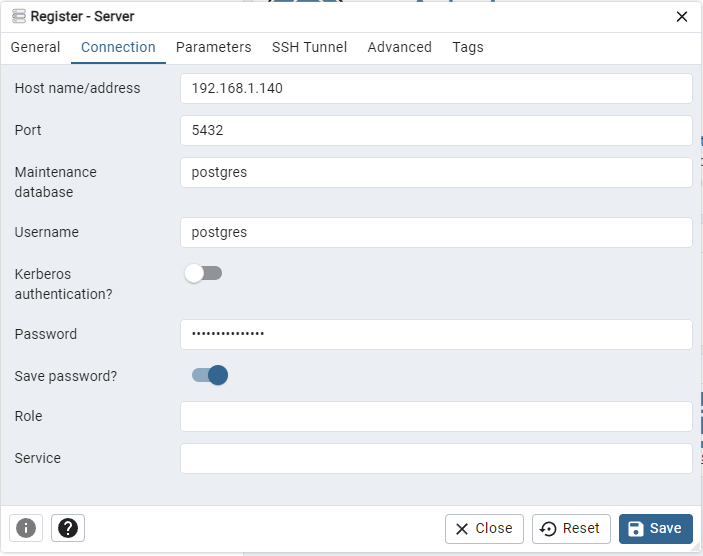

To add our Postgres cluster to PGAdmin, Right click Servers > Register > Server

We add Virtual IP as the host address. The password is the one that we defined in /etc/patroni/config.yml file.

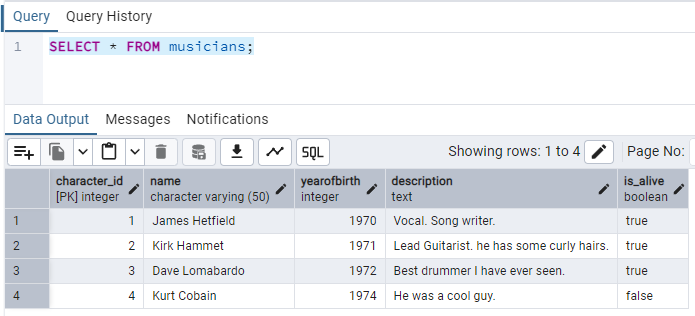

On PGAdmin > click Tools > Query Tool and paste the following SQL statement and execute.

This will create a musicians table

CREATE TABLE musicians (

character_id SERIAL PRIMARY KEY, -- Unique identifier for each musician

name VARCHAR(50) NOT NULL, -- Name of the musician

yearofbirth INT, -- Year of birth

description TEXT, -- Brief description

is_alive BOOLEAN DEFAULT TRUE -- Whether the musician is alive

);

Then INSERT some data into this table

INSERT INTO musicians (name, yearofbirth, description, is_alive)

VALUES

('James Hetfield', 1970, 'Vocal. Song writer.', TRUE),

('Kirk Hammet', 1971, 'Lead Guitarist. he has some curly hairs.', TRUE),

('Dave Lomabardo', 1972, 'Best drummer I have ever seen.', TRUE),

('Kurt Cobain', 1974, 'He was a cool guy.', FALSE)

Let's SELECT and see data from our table

SELECT * FROM musicians;

Let's see who is the current leader

etcdctl --endpoints=http://192.168.1.144:2379,http://192.168.1.145:2379,http://192.168.1.146:2379 endpoint status --write-out=tableCurrent leader is the node1.

I shutdown the ETCD Node1 and run the select statement again and I made sure the data is still available.

Now go to HAProxy Node and run the following to see who the master is and shut it down. Run the SELECT statement once more if you can reach to the database.

journalctl -u keepalived -f

At the moment, HAProxy01 is down & Postgresql Node1 is down and I can still reach to the database via Virtual IP.

Let's add some more data into our database now and then power on Postgresql Node1 to see if data is being replicated to it.

INSERT INTO musicians (name, yearofbirth, description, is_alive)

VALUES

('Bob Marley', 1960, 'Some Data Goes Here.', TRUE),

('Eric Clapton', 1971, 'Some Data Goes Here.', TRUE),

('Jon Bon Jovi', 1968, 'Some Data Goes Here.', TRUE),

('Blackie Lawless', 1963, 'Some Data Goes Here.', TRUE)

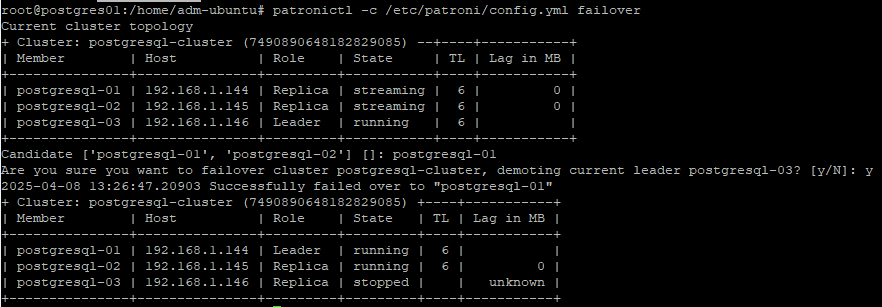

Power On Node1 and make sure the Node1 is the current leader. You can use the following command to select the leader.

patronictl -c /etc/patroni/config.yml failover

Then run the SELECT statement again and check if newly INSERTed data is available.

I can see that new data is also replicated to Node1.

In this enviroment, at least 2 Postgresql Nodes must be up and running. Otherwise, Patroni service becomes available.

Meaning that, If 1 Node remains, Quorum will be lost. Patroni can not get answer the question: "Is it safe for me to be the leader?". Patroni sees that as a "Split Brain" risk and refuse to be the leader. In Production environment, you can install ETCD service and Patroni / Postgresql service on seperate nodes.

In the next post, We will install and configure Barman Backup