Installing NGINX Ingress Controller onto K8s Cluster:

In this article, we deploy the NGINX Ingress Controller with Helm on an on-premises (bare-metal) Kubernetes cluster. Because the cluster is on-prem and has no cloud load balancer, we expose the ingress controller through a NodePort Service. NodePort works well for a lab or on-prem setup; in production, a LoadBalancer Service (backed by MetalLB on bare metal, for example) is often recommended.

The ingress controller adds a layer of abstraction to traffic routing, accepting traffic from outside the Kubernetes platform and load balancing it to Pods running inside the platform.

What we want to achieve:

The client sends a request to the application URL http://testapp1.k8s.local/.

DNS or /etc/hosts resolves testapp1.k8s.local to the HAProxy + Keepalived VIP address.

HAProxy receives the traffic on port 80. Its http_front frontend listens on *:80 and load balances the HTTP requests across the backend servers (worker01:32080, worker02:32080, worker03:32080).

The NGINX Ingress Controller receives the request on NodePort 32080 and matches the Host header (testapp1.k8s.local) and path (/) against the Ingress rule.

The controller forwards the request to the application Pods (httpd, port 80) selected by the internal Service (svc-testapp1). The Pod responds with "It works!", and the response flows back along the same path.

A. Add NGINX Helm Repo

helm repo add ingress-nginx https://kubernetes.github.io/ingress-nginx

helm repo update

B. Install NGINX Ingress Controller:

Because my cluster runs on bare metal / VMs on-premises rather than at a cloud provider, there is no cloud load balancer to provision, so I expose the ingress controller through a NodePort Service to make it reachable from outside. The command below installs a release named myingress-controller and exposes it for HTTP on port 32080 and for HTTPS on port 32443.

This lets you reach the ingress controller at http://<any-worker-node-ip>:32080

kubectl create namespace ns-ingress

helm install myingress-controller ingress-nginx/ingress-nginx \
  --namespace ns-ingress \
  --set controller.service.type=NodePort \
  --set controller.service.nodePorts.http=32080 \
  --set controller.service.nodePorts.https=32443 \
  --set controller.publishService.enabled=false \
  --set rbac.create=true \
  --set controller.serviceAccount.create=true \
  --set controller.serviceAccount.name=nginx-ingress-serviceaccount

The commands above first create a namespace (ns-ingress) and then install an ingress controller release named "myingress-controller" with Helm.

ingress-nginx/ingress-nginx is the official Helm chart of the ingress-nginx project. We set the controller's Service type to NodePort, which means every cluster node opens the given ports and forwards traffic arriving on them to the ingress controller. We define the NodePort for HTTP as 32080 and for HTTPS as 32443.

With controller.publishService.enabled=true, the controller copies the address of its own Service into the status of each Ingress, which is mainly useful when that Service is of type LoadBalancer. Setting it to false (--set controller.publishService.enabled=false) turns this off; it does not change how the controller is exposed, which in this setup is through NodePort only. --set rbac.create=true automatically creates the RBAC (Role-Based Access Control) resources (ClusterRole, ClusterRoleBinding), so the ingress controller has the permissions it needs to interact with the cluster. Finally, we create a ServiceAccount, which the ingress controller pods use to authenticate to the Kubernetes API.

Verify

kubectl get svc -n ns-ingress

We can summarize the following:

Service: myingress-controller-ingress-nginx-controller

Type: NodePort

HTTP Port: 32080

HTTPS Port: 32443

Cluster-IP: 10.102.21.171 (used internally)

EXTERNAL-IP <none> (expected in NodePort)
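
Before putting HAProxy in front, it can help to confirm that every worker really answers on the HTTP NodePort. The sketch below is only an illustration: check_nodeports is a hypothetical helper name, the worker IPs are the ones used in the HAProxy backend of this article, and it assumes the controller answers /healthz on its HTTP port (the same probe the HAProxy health check relies on).

```shell
# check_nodeports: hypothetical helper, probes /healthz on the HTTP NodePort of each node.
# Usage: check_nodeports 192.168.204.26 192.168.204.27 192.168.204.28
check_nodeports() {
  port=32080   # HTTP NodePort chosen at install time
  for node in "$@"; do
    # -f: treat HTTP errors as failure, -s: silent, -m 3: give up after 3 seconds
    if curl -fs -m 3 -o /dev/null "http://${node}:${port}/healthz"; then
      echo "${node}:${port} ok"
    else
      echo "${node}:${port} FAILED"
    fi
  done
}
```

A node reported as FAILED is worth looking at (firewall rules, kube-proxy, controller pod status) before it is added to the HAProxy backend.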

C. Routing traffic through HAProxy VIP to the NodePorts:

Routing client traffic through the HAProxy VIP to the NodePorts is the better approach for this setup because it provides:

- High Availability via HAProxy + Keepalived VIP

- Central entry point for HTTP(S) ingress traffic

- Easy to configure wildcard TLS, certbot, or SNI-based routing at ingress

- Great fit for on-prem / VM setups

- Decouples external exposure from internal node IPs

Log on to your haproxy nodes and add the following to the end of your /etc/haproxy/haproxy.cfg config file.

#---------#--------- Ingress Traffic ----------#----------#
frontend http_front
    bind *:80
    mode http
    option httplog
    default_backend ingress_http

backend ingress_http
    mode http
    balance roundrobin
    option httpchk GET /healthz
    http-check expect status 200
    option forwardfor
    server worker01 192.168.204.26:32080 check
    server worker02 192.168.204.27:32080 check
    server worker03 192.168.204.28:32080 check

frontend https_front
    bind *:443
    mode tcp
    option tcplog
    default_backend ingress_https

backend ingress_https
    mode tcp
    balance roundrobin
    server worker01 192.168.204.26:32443 check
    server worker02 192.168.204.27:32443 check
    server worker03 192.168.204.28:32443 check
#---------#--------- Ingress Traffic ----------#----------#

systemctl reload haproxy
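
A typo in haproxy.cfg can make the reload fail, so it may be worth validating the file first. The following is a minimal sketch, assuming HAProxy is managed by systemd as above; reload_haproxy_safely is a hypothetical wrapper name, and haproxy -c only parses and checks the configuration without starting a proxy.

```shell
# reload_haproxy_safely: hypothetical wrapper, run as root on each HAProxy node.
# Usage: reload_haproxy_safely [/path/to/haproxy.cfg]
reload_haproxy_safely() {
  cfg="${1:-/etc/haproxy/haproxy.cfg}"
  # -c validates the configuration file and exits, nothing is started
  if haproxy -c -f "$cfg"; then
    systemctl reload haproxy && echo "haproxy reloaded"
  else
    echo "config check failed, haproxy left untouched" >&2
    return 1
  fi
}
```

Running it on one HAProxy node at a time keeps the VIP served by the other node if something goes wrong.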

D. Test Ingress Access via HAProxy VIP

To verify that the ingress controller is reachable through the HAProxy VIP, I need to deploy a test application and expose it via an Ingress.

Deploy a Test App

kubectl create deployment deploy-testapp1 --image=httpd --port=80

kubectl expose deployment deploy-testapp1 --name=svc-testapp1 --port=80 --target-port=80 --type=ClusterIP

Save the following as testapp1rule.yaml.

The following file does not create pods or Services, and it does not open a NodePort either. It only describes which Service HTTP(S) traffic should be forwarded to: any HTTP request for testapp1.k8s.local is forwarded to the svc-testapp1 Service.

(HAProxy → (NodePort 32080) → Ingress Controller → svc-testapp1:80 → pod)

Every application that is served to the outside world needs an Ingress rule like this. If the applications use different hostnames, for example app1.configland.com and app2.configland.com, it is better to keep each Ingress rule in a separate YAML file. If they share one hostname, for example configland.com/app1 and configland.com/app2, the rules can live in a single YAML file.

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: irule-testapp1
  namespace: default
spec:
  ingressClassName: nginx
  rules:
  - host: testapp1.k8s.local
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: svc-testapp1
            port:
              number: 80

kubectl apply -f testapp1rule.yaml
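
Since every additional hostname needs a rule file of the same shape, the manifest can be generated instead of copied by hand. The sketch below is one possible way to do that; render_ingress, svc-app1, svc-app2 and the output file names are hypothetical and only serve as illustration, while the manifest layout mirrors testapp1rule.yaml.

```shell
# render_ingress NAME HOST SERVICE [PORT]
# Hypothetical helper: prints an Ingress manifest shaped like testapp1rule.yaml.
render_ingress() {
  name="$1"; host="$2"; service="$3"; port="${4:-80}"
  cat <<EOF
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ${name}
  namespace: default
spec:
  ingressClassName: nginx
  rules:
  - host: ${host}
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: ${service}
            port:
              number: ${port}
EOF
}

# Example: one file per hostname (names are placeholders)
# render_ingress irule-app1 app1.configland.com svc-app1 > app1rule.yaml
# render_ingress irule-app2 app2.configland.com svc-app2 > app2rule.yaml
```

Each generated file can then be applied with kubectl apply -f, exactly like testapp1rule.yaml.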

Create a DNS record for testapp1.k8s.local that points to the HAProxy VIP, or simply add an entry to your hosts file (C:\Windows\System32\drivers\etc\hosts on Windows, /etc/hosts on Linux).
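
If you want to test the path through the VIP before touching DNS or the hosts file, curl can pin the hostname to an address for a single request. This is a small sketch, not part of the original setup: curl_via_vip is a hypothetical helper, and the VIP has to be supplied by you because it depends on your Keepalived configuration.

```shell
# curl_via_vip VIP [HOST]
# Hypothetical helper: --resolve maps HOST:80 to the VIP for this one request,
# so the Host header is correct without any DNS or hosts-file entry.
curl_via_vip() {
  vip="$1"; host="${2:-testapp1.k8s.local}"
  curl -s --resolve "${host}:80:${vip}" "http://${host}/"
}

# Example (replace the placeholder with your HAProxy VIP):
# curl_via_vip <haproxy-vip>
```

Once the Ingress rule and the test application are in place, the response should be the httpd default page.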

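Scale NGINX Ingress to 3 Replicas (One Per Worker):

This tells Helm to run 3 replicas of the ingress controller pod behind the same Service. --reuse-values keeps the NodePort settings from the original install; without it, passing --set on an upgrade resets every other value to the chart default.

helm upgrade myingress-controller ingress-nginx/ingress-nginx \
  --namespace ns-ingress \
  --reuse-values \
  --set controller.replicaCount=3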

Verify the ingress Pods:

kubectl get pods -n ns-ingress -o wide
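
Note that a replica count of 3 does not by itself pin one pod to each worker; the scheduler usually spreads them, but that is not guaranteed unless anti-affinity or topology spread constraints are configured. The sketch below counts controller pods per node so the spread can be checked at a glance. pods_per_node is a hypothetical helper name, and the label selector is the same one used for the PDB later in this article.

```shell
# pods_per_node: hypothetical helper, prints "<count> <node>" for the controller pods.
pods_per_node() {
  kubectl get pods -n ns-ingress \
    -l app.kubernetes.io/name=ingress-nginx \
    -o custom-columns=NODE:.spec.nodeName --no-headers |
    sort | uniq -c
}
```

With an even spread, each worker appears once with a count of 1.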

Pick any controller pod, open a shell in it, and run the curl command from inside the pod. You should see "It works!"

kubectl exec -n ns-ingress -it <nginx-ingress-pod> -- /bin/sh

curl -H "Host: testapp1.k8s.local" http://127.0.0.1

Verify

kubectl get svc

kubectl get endpoints svc-testapp1

kubectl get ingressclass

kubectl describe ingress irule-testapp1

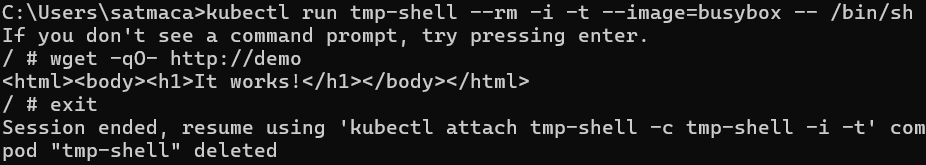

The following confirms that the Service works from inside the cluster (you should see "It works!"):

kubectl run tmp-shell --rm -i -t --image=busybox -- /bin/sh

/ # wget -qO- http://svc-testapp1

Finally, we can browse to http://testapp1.k8s.local from our management laptop and see the "It works!" page.

By deploying the NGINX Ingress Controller with NodePort access and fronting it with a highly available HAProxy + Keepalived VIP, we have a single, highly available entry point for ingress traffic into our on-premises Kubernetes cluster.

Enable HTTPS for Your Ingress (Self Signed):

Since our domain is an internal domain, we will use a self-signed certificate for testapp1.

On my management laptop, I already have OpenSSL installed. I create a self-signed certificate and save it to "C:\Program Files\OpenSSL-Win64\bin\mycerts".

openssl req -x509 -nodes -days 365 -newkey rsa:2048 -keyout "C:\Program Files\OpenSSL-Win64\bin\mycerts\testapp1.key" -out "C:\Program Files\OpenSSL-Win64\bin\mycerts\testapp1.crt" -subj "/CN=testapp1.k8s.local/O=testapp1"

Then create a Kubernetes TLS secret

kubectl create secret tls testapp1-tls --cert="C:\Program Files\OpenSSL-Win64\bin\mycerts\testapp1.crt" --key="C:\Program Files\OpenSSL-Win64\bin\mycerts\testapp1.key" --namespace=default

Modify testapp1rule.yaml like this

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: irule-testapp1
  namespace: default
spec:
  ingressClassName: nginx
  tls:
  - hosts:
    - testapp1.k8s.local
    secretName: testapp1-tls # <-- match the TLS secret name
  rules:
  - host: testapp1.k8s.local
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: svc-testapp1
            port:
              number: 80

Apply the updated Ingress

kubectl apply -f testapp1rule.yaml

The HAProxy config already handles both HTTP and HTTPS, so nothing needs to change on the HAProxy side.

Because the certificate is self-signed rather than issued by a public CA, browsers will show a warning, but the site works over HTTPS.

We can now use this ingress controller for all our Kubernetes applications. It acts as a reverse proxy and load balancer at the edge of our cluster. It can handle:

- multiple domains

- path-based routing such as /app1, /grafana etc.

- TLS termination (wildcard or individual certs)

Shutdown and Recovery Procedure (Updated):

A PodDisruptionBudget (PDB) is a Kubernetes resource that defines how many pods of a workload may be down during voluntary disruptions (e.g. draining a node, shutdowns, upgrades). It protects critical workloads from having too many pods disrupted at the same time.

When you try to gracefully shut down the cluster (or drain nodes), the PDB can block pod evictions, because evicting the remaining pods would violate its availability guarantee. Therefore we need to modify our shutdown and recovery procedure.
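
The blocking behaviour follows from simple arithmetic: for a PDB defined with minAvailable, the number of evictions allowed at any moment is the number of healthy pods minus minAvailable, never below zero. The sketch below just reproduces that calculation; allowed_disruptions is a hypothetical helper, and minAvailable=1 is an assumption about the PDB in this cluster that you can confirm with kubectl get pdb -n ns-ingress, which also shows an ALLOWED DISRUPTIONS column.

```shell
# allowed_disruptions HEALTHY MIN_AVAILABLE
# Hypothetical helper: evictions a minAvailable-style PDB permits right now.
allowed_disruptions() {
  healthy="$1"; min_available="$2"
  allowed=$((healthy - min_available))
  # A PDB never reports a negative budget
  if [ "$allowed" -lt 0 ]; then allowed=0; fi
  echo "$allowed"
}

allowed_disruptions 3 1   # three healthy controller pods: prints 2
allowed_disruptions 1 1   # last healthy pod: prints 0, the next eviction is refused
```

This is why draining the last worker can hang: once only one healthy controller pod is left, the budget is zero and the eviction is rejected until the PDB is removed.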

To apply the desired configuration every time we shut down and restore the cluster, I will use the YAML file below.

# ingress-values.yaml
controller:
  replicaCount: 3   # keep the 3 replicas; the chart only renders a PDB when there is more than one
  service:
    type: NodePort
    nodePorts:
      http: 32080
      https: 32443
    externalTrafficPolicy: Local
  publishService:
    enabled: false

Shutdown Procedure:

Run the following on any master node. If you plan to shut down only some nodes and keep services running, use the drain commands below so that pods are moved to the remaining nodes. If you are going to shut down every node in the cluster, there is nowhere to move the pods, so running kubectl cordon on worker01, worker02 and worker03 is enough.

kubectl delete pdb -n ns-ingress -l app.kubernetes.io/name=ingress-nginx

kubectl drain worker01 --ignore-daemonsets --delete-emptydir-data

kubectl drain worker02 --ignore-daemonsets --delete-emptydir-data

kubectl drain worker03 --ignore-daemonsets --delete-emptydir-data
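
A drain can wait indefinitely if an eviction keeps being refused, so for scripted shutdowns it may be safer to bound each drain and stop at the first failure. This is a minimal sketch under that assumption: drain_workers is a hypothetical helper and the 120 second timeout is an arbitrary example value.

```shell
# drain_workers NODE...
# Hypothetical helper: drains nodes one by one and stops at the first failure.
drain_workers() {
  for node in "$@"; do
    echo "draining ${node}"
    # --timeout makes kubectl give up instead of waiting forever
    kubectl drain "$node" --ignore-daemonsets --delete-emptydir-data --timeout=120s || {
      echo "drain of ${node} did not finish, stopping" >&2
      return 1
    }
  done
}

# Example:
# drain_workers worker01 worker02 worker03
```

Stopping early leaves the remaining workers schedulable, which makes it easier to investigate what blocked the eviction.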

Shutdown Worker Nodes

Shutdown Master Nodes

Shutdown HAProxy + Keepalived Nodes

Recovery Procedure:

Power the machines back on in the reverse order, then run the commands below on any master node:

Start HAProxy + Keepalived Nodes

Power On Master Nodes

Power On Worker Nodes

#Run on any Master

kubectl uncordon worker01

kubectl uncordon worker02

kubectl uncordon worker03

#Recreate PodDisruptionBudgets (PDBs)

helm upgrade myingress-controller ingress-nginx/ingress-nginx -n ns-ingress -f ingress-values.yaml
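
After the upgrade, a quick check that the nodes are Ready and the controller pods are back can close the procedure. The sketch below is only a suggestion; post_recovery_check is a hypothetical helper and the 300 second timeout is an example value. The last command lists the PDB only if the chart rendered one.

```shell
# post_recovery_check: hypothetical helper, run on any master after recovery.
post_recovery_check() {
  # Block until every node reports Ready, or give up after 5 minutes
  kubectl wait --for=condition=Ready node --all --timeout=300s || return 1
  # Controller pods and the nodes they landed on
  kubectl get pods -n ns-ingress -o wide
  # PodDisruptionBudgets in the ingress namespace, if any
  kubectl get pdb -n ns-ingress
}
```

If the wait times out, kubectl get nodes shows which node is still NotReady.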